
PROJECT GUIDELINE

GOOGLE CREATIVE LAB, New York / GOOGLE / 2021

Overview

Why is this work relevant for Innovation?

Project Guideline technology was used to complete the first independent 200m sprint, the first 1-mile run, and the first 5K by a blind runner. Many accessibility technologies exist for the blind community, but until now none could enable a person who is blind to run independently and safely, as a sighted person would. Project Guideline uses advances in machine learning, optimized to run in real time on a consumer mobile phone without an internet connection, to enable people who are blind or have low vision to run or walk independently for exercise.

Background

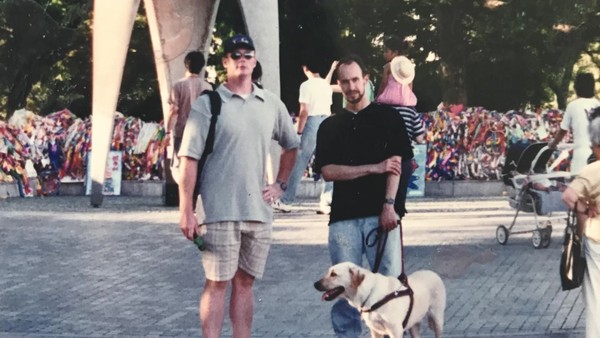

At a hackathon in August 2019, Thomas Panek, an accomplished marathon runner who is blind, challenged our team: “For greater independence while exercising, can we make navigation for a blind runner possible?” According to the International Agency for the Prevention of Blindness, 217 million people in the world have moderate to severe vision loss and 36 million people are blind. Vision loss often leads to declines in physical and mental health. Innovation in accessibility draws its power from collaboration with the community and with industry. In a design partnership with Thomas, we set out to explore whether we could enable independent running and walking for exercise, improving quality of life for people who are blind or have low vision.

Describe the idea

Typically, when someone who is blind or has low vision runs for exercise, they use a treadmill, rely on a guide dog, or run with a human guide who is tethered to them. In many cases, they may not have run independently since losing their sight. We worked with Thomas to better understand what he would need to run independently. We set out to explore whether the technology most people already carry in their pockets, a consumer smartphone and headphones, plus a line painted on the ground, could enable Thomas to run independently. Could we build accessibility technology that is economically accessible and doesn’t rely on specialty hardware? Could we build a solution that runs entirely on-device, without an internet connection? We achieved all of these goals, using consumer hardware to build an on-device machine learning system that enabled Thomas to run independently.

What were the key dates in the development process?

September 2019: Thomas, a blind marathon runner and CEO, joins a hackathon and asks the team to explore whether technology can enable independent running for people who are blind.

December 2019: Team and Thomas hold a three-day offsite sprint at The Armory Track, an indoor Olympic 200m speed track in NYC. Blindfolded team members walk and run laps on the track, first with a tethered sighted guide runner and then with a guide dog. A prototype of the audio navigation system is developed, tested, and refined with Thomas.

January 2020: Team and Thomas hold another three-day offsite sprint at The Armory Track. An OpenCV-based computer vision line-tracking prototype is tested with a more advanced version of the audio guidance system. Thomas successfully completes 8 consecutive laps of the 200m track, roughly 1 mile.

February & March 2020: Team collects training data for the ML system.

May 2020: Team develops a data synthesis pipeline using Blender, an open-source 3D creation suite. With this pipeline, the team can programmatically create virtual worlds with running paths, guidelines, and various adversarial objects. The synthesized data is combined with in-domain, real-world data to train the ML models.

Summer 2020: Team refines the ML models and post-processing pipelines and tests the evolving system outdoors in isolation. Team also develops a computer-based evaluation pipeline to measure model performance on evaluation datasets.

August 2020: Team secures an outdoor test site, Ward Reservation, a Westchester County park in Pound Ridge, NY. A permanent guideline is installed on a 600m stretch of curving, undulating roadway. Thomas runs independently for the first time in 25 years while his wife and four children look on. He spends the day running as fast as his lungs and legs will carry him, in an emotional day for everyone involved.

October 2020: Team works with New York Road Runners (NYRR) and NYC agencies to paint a guideline on the Upper Loop of New York’s Central Park, a 1.42-mile circuit spanning East Drive to West Drive, from 110th to 102nd Street. Team collects data for ML training and tests the current system with Thomas. The line is removed after 24 hours.

November 2020: Team paints another line in the same location in Central Park, again with the coordination and cooperation of NYRR and NYC agencies. Thomas runs NYRR’s “Run for Thanks” 5K race independently, using only the Guideline technology to guide him, at a 7-minute-mile pace.

November 2020: On the same day as Thomas’ race, Project Guideline is shared with the world.

Describe the innovation / technology

Using advances in on-device machine learning, we built a system that enables people who are blind or have low vision to use their Android devices to run or walk independently. Users wear their phone around their waist in a custom harness, with the camera angled to view the path and the pre-painted guideline ahead. The machine learning system receives the camera feed and segments the line from the environment. A post-processing system smooths the model output, estimates the user’s position relative to the line, and provides real-time stereo audio feedback to help them follow it. As users drift left, they hear a signal that grows in volume and dissonance the further they drift; the same happens if they drift right. When they run on or near the line, the effect is like being inside an audio tunnel. The system runs entirely on-device, without an internet connection. We’re currently working to increase the reliability and robustness of our ML model, refine the experience design and audio feedback systems, and add new ML features.
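The camera-to-audio loop described above (segment the line, smooth the estimate, map drift to stereo audio) can be illustrated with a minimal Python sketch of the last two stages. It assumes the ML model already outputs a binary segmentation mask for each frame. The names `line_offset`, `EmaSmoother`, `stereo_cue`, and `guidance_step`, the exponential-moving-average smoother, the dead-zone width, and the choice to play the cue in the ear on the side of the drift are all hypothetical illustrations, not Project Guideline’s actual implementation.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical input format: 1 = pixel classified as guideline, 0 = background.
Mask = List[List[int]]


def line_offset(mask: Mask, bottom_fraction: float = 0.5) -> Optional[float]:
    """Mean horizontal position of line pixels in the lower part of the frame
    (nearest the runner), normalized to [-1, 1] with 0 at the image center.
    Returns None when no line pixels are found. Assumes at least 2 columns."""
    rows, cols = len(mask), len(mask[0])
    start = int(rows * (1.0 - bottom_fraction))
    xs = [x for row in mask[start:] for x, v in enumerate(row) if v]
    if not xs:
        return None
    center = (cols - 1) / 2.0
    return (sum(xs) / len(xs) - center) / center


@dataclass
class EmaSmoother:
    """Exponential moving average, an assumed stand-in for post-processing."""
    alpha: float = 0.3
    value: Optional[float] = None

    def update(self, x: Optional[float]) -> Optional[float]:
        if x is None:  # no detection this frame: hold the last estimate
            return self.value
        if self.value is None:
            self.value = x
        else:
            self.value = self.alpha * x + (1 - self.alpha) * self.value
        return self.value


def stereo_cue(drift: float, dead_zone: float = 0.1) -> Tuple[float, float, float]:
    """Map drift (negative = runner left of the line, positive = right) to
    (left_gain, right_gain, dissonance), each in [0, 1]. Louder and more
    dissonant the further the runner drifts; silent inside the dead zone."""
    magnitude = min(abs(drift), 1.0)
    if magnitude <= dead_zone:
        return 0.0, 0.0, 0.0  # inside the "audio tunnel"
    level = (magnitude - dead_zone) / (1.0 - dead_zone)
    if drift < 0:
        return level, 0.0, level  # assumed: cue plays in the left ear
    return 0.0, level, level      # assumed: cue plays in the right ear


def guidance_step(mask: Mask, smoother: EmaSmoother) -> Tuple[float, float, float]:
    """One frame: mask -> smoothed line offset -> stereo audio parameters."""
    offset = smoother.update(line_offset(mask))
    if offset is None:
        return 0.0, 0.0, 0.0
    # Line appearing right of center means the runner has drifted left of it.
    return stereo_cue(-offset)
```

For example, a mask whose line pixels sit at the right edge of the lower half of the frame gives an offset of 1.0, meaning the runner has drifted left, and `guidance_step` returns `(1.0, 0.0, 1.0)`: full volume and dissonance in the left channel. A real system would feed these values into an audio synthesizer and would need a deliberate policy for frames where the line is lost, rather than simply falling silent.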

Describe the expectations / outcome

In August 2020, Thomas used our system to run independently for the first time in 25 years, since going blind at age 25. A few months later, in November, he used the system to independently run a New York Road Runners 5K virtual race in New York City’s Central Park at a 7-minute-mile pace. In addition to strong press coverage at launch, we fielded 150+ requests for partnerships and trials from leading vision and accessibility non-profits and research institutions, paving the way for us to further develop the technology and get it to more people.