
FOCUSMOTION

R/GA, New York / FOCUSMOTION / 2016

Overview

Campaign Description

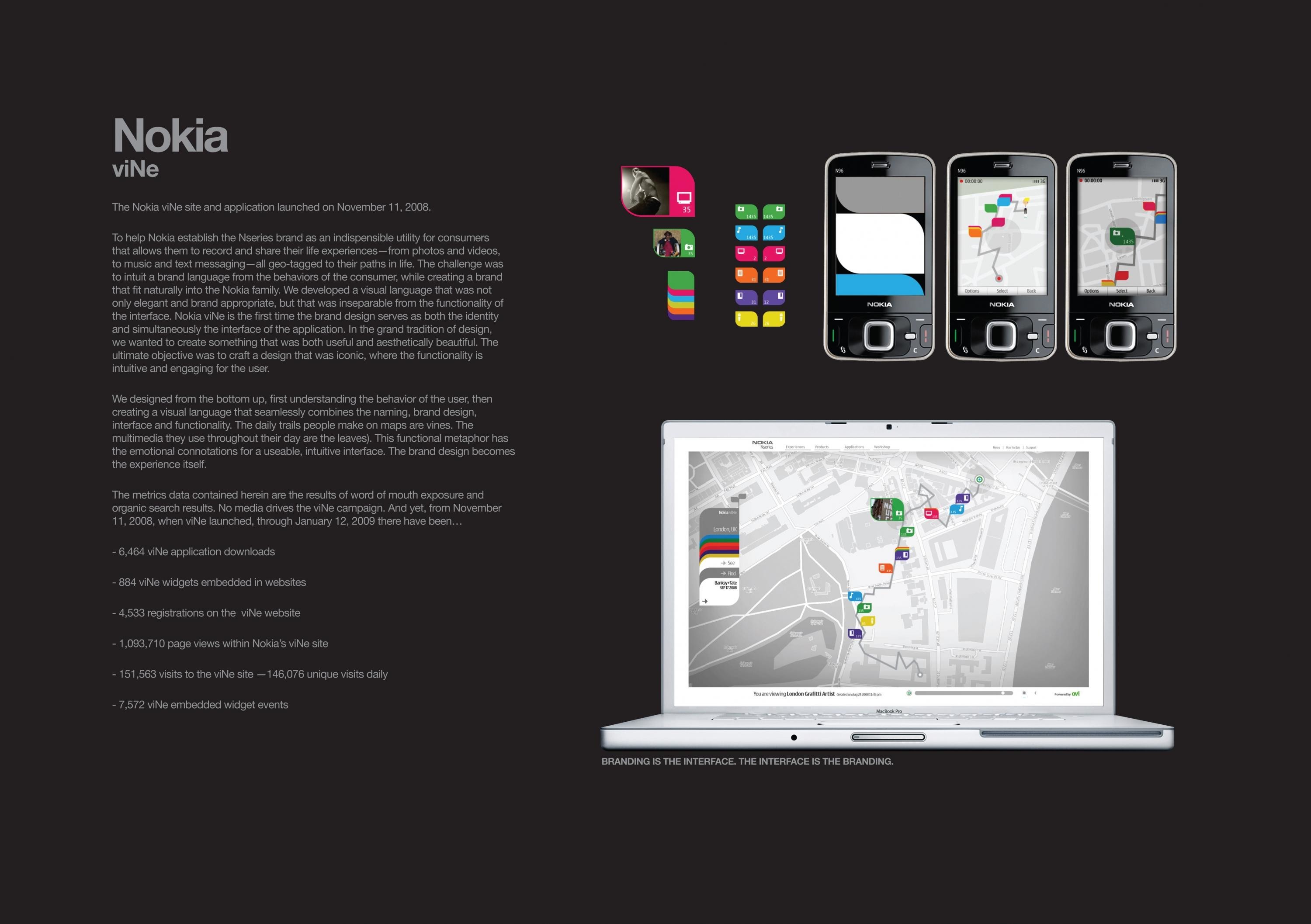

We wanted to create an algorithm that truly reads and understands movement. Beyond identifying what movement is performed, we wanted the algorithm to compare it with a “master” movement so that users could understand whether they were using consistent form and speed and moving through the right range of motion.
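The comparison against a “master” movement can be illustrated with a small sketch. This is not FocusMotion's algorithm; it swaps in classic dynamic time warping (DTW) for form, uses duration for speed, and uses peak-to-peak amplitude as a crude range-of-motion proxy. It assumes each repetition has already been segmented into a 1-D sensor trace (for example, acceleration magnitude) with a non-constant master, and the function names `dtw_distance` and `compare_to_master` are hypothetical.

```python
import math


def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1-D sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def compare_to_master(performed, master, sample_rate_hz=50.0):
    """Compare one performed repetition with a hypothetical 'master' recording."""
    return {
        # Shape mismatch after time alignment, scaled by total length.
        "form_distance": dtw_distance(performed, master) / (len(performed) + len(master)),
        # Above 1.0 means the user moved more slowly than the master.
        "speed_ratio": len(performed) / len(master),
        # Peak-to-peak amplitude ratio as a rough range-of-motion proxy.
        "range_ratio": (max(performed) - min(performed)) / (max(master) - min(master)),
        "duration_s": len(performed) / sample_rate_hz,
    }


# Usage: a slower, shallower repetition compared with the master.
master = [math.sin(2 * math.pi * i / 50) for i in range(50)]
performed = [0.8 * math.sin(2 * math.pi * i / 75) for i in range(75)]
report = compare_to_master(performed, master)
```

In this usage the performed trace is 1.5 times as long as the master and reaches about 80% of its amplitude, which the report surfaces as a higher `speed_ratio` and a lower `range_ratio`; DTW keeps the slower tempo from being counted as a form error by itself.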

We wanted to simplify the way that doctors, coaches, athletes, patients, trainers, and general users record and understand human movement. We also wanted to open up possibilities in new markets like tele-therapy and labor monitoring, where a physical therapist or manager can identify and intervene if a user is performing an injury-inducing movement. Much like a speech algorithm that can understand multiple languages, we wanted to create a tool that wasn’t limited to exercise; we saw huge opportunities in physical therapy, defense, and workforce monitoring.

Execution

Every unique human movement generates a unique waveform from a wearable device. In the same way that Siri can differentiate between the words “dog” and “cat”, we can tell the difference between a bicep curl, lifting a box, and even gunfire.
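The idea that each movement leaves a distinguishable waveform can be sketched as a nearest-template classifier. This is an illustrative stand-in, not the FocusMotion algorithm: it assumes each movement is already segmented into a 1-D sensor window, resamples every window to a fixed length, z-normalizes it so tempo and amplitude matter less than shape, and picks the template at the smallest Euclidean distance. The labels `bicep_curl` and `box_lift` and all function names are hypothetical.

```python
import math


def resample(signal, length=32):
    """Linearly resample a 1-D signal to a fixed number of points."""
    if len(signal) == 1:
        return [float(signal[0])] * length
    out = []
    for k in range(length):
        pos = k * (len(signal) - 1) / (length - 1)
        lo = int(math.floor(pos))
        hi = min(lo + 1, len(signal) - 1)
        frac = pos - lo
        out.append(signal[lo] * (1 - frac) + signal[hi] * frac)
    return out


def znorm(signal):
    """Shift to zero mean and scale to unit variance (flat signals become zeros)."""
    mean = sum(signal) / len(signal)
    std = math.sqrt(sum((x - mean) ** 2 for x in signal) / len(signal))
    if std == 0:
        return [0.0] * len(signal)
    return [(x - mean) / std for x in signal]


def classify(window, templates, length=32):
    """Return the label of the template whose shape is closest to the window."""
    query = znorm(resample(window, length))
    best_label, best_dist = None, float("inf")
    for label, template in templates.items():
        ref = znorm(resample(template, length))
        dist = math.sqrt(sum((q - r) ** 2 for q, r in zip(query, ref)))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


# Hypothetical templates: a smooth hump for a curl, a ramp-and-hold for a lift.
templates = {
    "bicep_curl": [math.sin(math.pi * i / 40) for i in range(41)],
    "box_lift": [min(1.0, i / 10) for i in range(40)],
}
# A slower, larger curl still matches the curl template by shape.
slow_curl = [2 * math.sin(math.pi * i / 60) for i in range(61)]
label = classify(slow_curl, templates)
```

Resampling plus z-normalization is the simplest way to compare windows of different durations and intensities; a production system would more likely use learned features or an elastic distance such as DTW.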

Before the FocusMotion platform, motion data had to be entered by hand, observed directly by a human, or recorded with expensive computer vision equipment. Our algorithm opens up possibilities in new markets like tele-therapy and labor monitoring, where a physical therapist or manager can identify and intervene if a user is performing an injury-inducing movement.

A developer, whether a large company like Nike or a teenage developer at home, can download the SDK right now and begin tracking movements on any open wearable device: Android Wear, Microsoft Band, Apple Watch, and Pebble, with more coming soon. We launched the fitness SDK back in Sept,

Outcome

We’ve built a robust and scalable platform that works across any device for any developer on any OS with any human movement, and as devices evolve, our algorithm will grow and adapt with them.

In the next 5-10 years, we’ll all begin to train, recover, rehabilitate, and work differently, because we’ll have new correlations drawn from the tracked movement data of millions of people, and we’ll begin to understand which routines and recoveries work best for our individual physiologies. FocusMotion’s algorithm will be part of a solution that adds context to biometric markers and helps open up new possibilities for personalized care, intervention, and evolution. Our data will be used to predict and prevent injury.

We’ve received 2MM in funding to date and are seeking 2.5MM in our first institutional seed round. Additionally, we’ve released a tool called “Creator” that permits any

Relevancy

A first in wearable history, FocusMotion enables any company to use any wearable to identify and track real human movements: what specific movement a user is performing, how many times it was performed, and how well it was performed. We take the waveforms from any wearable device and construct the language of human movement; in the same way that Siri can detect speech, we can detect the differences between a curl, moving a box in a factory, and gunfire. FocusMotion also developed a machine learning tool that lets anyone teach the algorithm a new movement.

Synopsis

In the 10 years since Nike+, the sole focus of most wearable fitness tracking devices has been steps and sleep. We believed there was more to human movement than steps, and that a smarter algorithm could enable more markets and broaden the use case of every wearable device. Our goal was to create a hardware-agnostic, OS-agnostic platform that would discern the language of human movement and enable any developer not only to use our library of movements but also to add, curate, and deploy their own movement recognition using our machine learning tools. Just as Siri understands speech, we would create a tool that understands the nuances of human movement: what movement a user is doing, how many times it was performed, and how well it was performed.
